SurréARisme -- Exploring Perceptual Realism in Mixed Reality using Novel Near-Eye Display Technologies

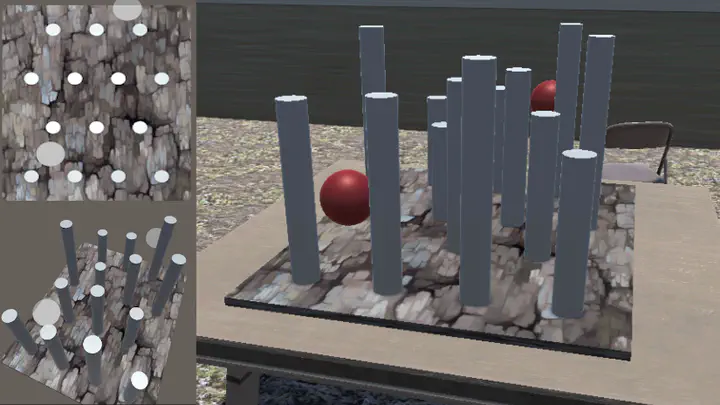

We aim to study how modifying the rendering of virtual content in AR can influence users’ perception.

SurréARisme is a PHC SAKURA project (2022-2023) funded by the French Ministries of “Europe and Foreign Affairs” and of “Higher Education and Research” and by the “Japan Society for the Promotion of Science” (JSPS). It brings together École Centrale de Nantes (Jean-Marie NORMAND), the University of Tokyo (Yuta ITOH) and IMT Atlantique (Étienne PEILLARD and Guillaume MOREAU).

Summary:

The objective of this project is to better characterize human perception in the context of Mixed Reality (MR) and, building on this characterization, to propose new ways of interacting with virtual content in MR.

MR is a research field that encompasses Virtual Reality (VR), where users are immersed in fully digital environments with which they can interact in real time, as well as Augmented Reality (AR), which aims to extend our real world by feeding virtual information to human perception [1].

A typical MR scenario consists of overlaying virtual images or objects onto the user’s view via near-eye displays, which can be implemented through various technologies with varying efficiency and form factors. These are typically Head-Mounted Displays (HMDs), either semi-transparent (in the case of AR) or opaque (for VR and some cases of AR), equipped with components such as cameras and projectors. More complex systems can also use lasers and other optical elements.

One of MR’s goals is to make virtual elements as immediately perceptible and as easy to interact with as real ones [2]. From this point of view, it is important to precisely characterize users’ perception of the virtual content displayed by MR devices [3]. This includes studying how the appearance of virtual objects compares to that of real ones, since realism plays an important role in perception [4]. The lack of realism in MR displays, together with the limitations that the technical constraints of MR hardware impose on the rendering of virtual objects, introduces biases and degrades users’ perception, interaction, and understanding of MR environments. Consequently, improving the rendering of virtual objects and approaching “real” realism is a major issue for MR experiences [5]. However, our perceived realism is not always consistent with physical realism. Our first objective is to improve the displays and rendering of MR experiences. Since complete solutions that achieve realism in MR are unlikely in the near future, we propose to explore ways of going “beyond” realism to improve users’ perception and efficiency in MR. MR can combine complex optical displays and rendering pipelines to integrate virtual objects in a non-realistic way, and such virtual objects may nevertheless be better suited to the task than realistic renderings.

From both a technological and a cognitive point of view, these subjects have been partly studied with the aim of theoretically modeling the discrepancies between reality and what is perceived in MR. By pooling our expertise, we can combine hardware (especially optical elements, Japanese side), software, and human perception (French side) perspectives to provide more perceptually acceptable MR renderings. Our second goal, building on our advances in the perceptual characterization of how MR renders virtual objects, is to explore ways of improving interaction in MR. In the MR context, interaction consists of selecting and manipulating virtual objects. It can thus be considered the final step of the perception-action loop [6] in MR: users have to perceive the virtual content before they can decide which actions to perform and how to perform them.

We therefore aim to propose experimental protocols that take advantage of our team’s expertise in MR rendering technologies (Japanese side) and in experimental design and data analysis (French side), in order to implement and conduct user experiments characterizing new interaction techniques that improve human perception in MR. We tackle this question through classical user studies that evaluate the quality of AR renderings from a perceptual point of view, which help us select the main research axes to focus on. These studies can help define the display specifications required for optical design. They can also assess whether the importance of some common technical aspects has been overestimated to date.

In summary, we aim to further characterize human perception in the context of Mixed Reality, where virtual content is merged with real images and presented to users. This will be achieved in two steps: first, by proposing advances in MR displays, in particular Optical See-Through (OST) near-eye displays (expertise of the Japanese team); and second, by designing and performing user experiments that precisely characterize the drawbacks and benefits of such MR devices (expertise of the French team). Another objective is to improve interaction in the MR context, based on the knowledge gained about perception in MR.

Bibliography:

[1] Milgram, P., et al. (1995). Augmented reality: A class of displays on the reality-virtuality continuum. In Telemanipulator and Telepresence Technologies (Vol. 2351). International Society for Optics and Photonics.

[2] Ma, J. Y., & Choi, J. S. (2007). The Virtuality and Reality of Augmented Reality. Journal of Multimedia, 2(1), 32-37.

[3] Drascic, D., & Milgram, P. (1996, April). Perceptual issues in augmented reality. In Stereoscopic displays and virtual reality systems III (Vol. 2653, pp. 123-134). International Society for Optics and Photonics.

[4] Rademacher, P., Lengyel, J., Cutrell, E., & Whitted, T. (2001). Measuring the Perception of Visual Realism in Images (pp. 235-247).

[5] Slater, M., et al. (2020). The ethics of realism in virtual and augmented reality. Frontiers in Virtual Reality, 1.

[6] Goldstein, B. E., & Brockmole, J. R. (2017). Introduction to Perception. In Sensation and Perception (10th ed., pp. 3-19).

[7] Zhao, J., et al. (2016). Energy-based generative adversarial network. arXiv preprint arXiv:1609.03126.